Even if the big name has changed, the old saying itself still stands: “no CTO was ever fired for buying IBM”…Ī lot has happened since then though, and with the highest valued software IPO ever, I believe companies will consider it more seriously.īut did AWS Redshift actually catch up with Snowflake in the meantime? Could it be the edge is now gone? Redshift was a safer approach, I totally get it. If Snowflake gets acquired by XYZ, what then?”), and they also found it very hard to establish a watertight budget with it’s pay-per-use pricing. They were usually worried about potential takeovers ( a well-known CTO said to me once “I want to remain fully on AWS. Its lacking of a large cloud vendor backing, and with a totally different pricing strategy, made CTOs nervous. įor years, I have been known to praise Snowflake to all my clients, but even if the advantages were clear, I always found it to be hard sell unfortunately. It did, and then some… You can read all about my experience with Snowflake in the section My Personal Experience with Snowflake of this article. I am naturally suspicious of new technology, and the rest of the team was just the same, so we carried out several tests to prove this technology was worthy. I came across it for the first time in 2016, when our data manager suggested we tried it for our new data warehouse. Snowflake was publicly announced in 2014, less than 2 years after Amazon Redshift’s release, but its growth was more of a slow burner. This year, they finally released the machine that can make Redshift a worthy competitor of Snowflake: the RA3.xlplus at $1.2/hour. Much more affordable, but probably still not quite there for most.

Then, in Mar-2020 they released the ability to pause your cluster, and straight after the first “affordable” RA3 machine is announced, the ra3.4xlarge charged at $3.6/hour. This meant that it was really not a viable option ( financially) for most Redshift customers.

However, though a great step in the right direction, there was still a (very expensive) problem: only one machine type was available (rs3.16xlarge) and charged at $14 per hour, meant the cost was prohibitive for most customers. This meant storage was no longer cheap…Īfter multiple attempts to mitigate these issues (Redshift Spectrum in 2017 Redshift Elastic Resize in 2018), Amazon finally releases a much awaited new architecture in Dec-2019: the Redshift RA3 clusters, which used S3 distributed storage as a way to finally decouple storage from compute. Scaling-out compute was slow and a true admin task, as it implied data replication and/or redistribution, and growing the disk capacity implied more disks, which implied more servers. Or those who required very large machines for the overnight ETL, but not throughout the day, and wanted to be able to scale accordingly.īoth these problems were, at their core, an overall architecture design “feature” (or maybe flaw): Redshift’s share-nothing architecture did in fact share something, the server barebone.

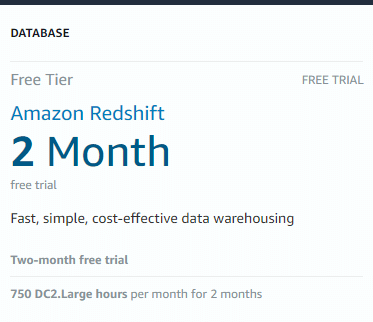

This was a problem for a lot of companies who perhaps had a lot of data, but didn’t need dozens of servers worth of compute power. A lot of good features were added through the years, but the two biggest drawbacks remained: the coupling between storage and compute, and how hard (and disruptive) it is to scale. Years have passed and companies became more demanding, being fast and on the cloud was no longer enough. All the above made AWS Redshift the perfect solution for many companies back then, and many more ever since. Put altogether, this was quite an appealing offering.Īnd let’s not forget that, back then, if you had (or planned on having) big data, you would probably be “forced” down the Hadoop rabbit hole, which was still in its early days and still very much on-prem focused. And then, the cherry on top: using pure SQL. So what was the fuss all about? In my opinion, this is one of those situations where the whole is bigger than the sum of its parts.Ī massively parallel platform, capable of handling terabytes of data just as well as gigabytes, built for analytics, managed and hosted on the cloud, charged by the hour, which you could scale out on demand. In reality, Redshift itself was built using ParAcell’s technology, itself a MPP database. It was not the first platform targeting analytics, and clearly not the first massively parallel platform (MPP) database, which probably was Teradata (or maybe Vertica?). When Amazon released Redshift back in 2013, it was quite a game changer. Latest and more affordable RA3.xlplus nodes released in December 2020, Amazon News Amazon Redshift
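To put the hourly prices quoted in this article into perspective, here is a rough back-of-the-envelope sketch of the monthly compute cost of a single node, both running around the clock and paused outside working hours. The hourly rates are the ones quoted in this article; the 730 hours per month and the "10 hours a day, 22 working days" schedule are my own assumptions for illustration, and storage and any other charges are ignored.

```python
# Rough monthly compute cost per node, using the hourly prices quoted in this article.
# Storage and any other charges are deliberately left out.
HOURLY_RATE_USD = {
    "ra3.16xlarge": 14.0,
    "ra3.4xlarge": 3.6,
    "ra3.xlplus": 1.2,
}

HOURS_ALWAYS_ON = 730          # assumption: average month (8760 hours / 12)
HOURS_WITH_PAUSING = 10 * 22   # assumption: 10 hours a day, 22 working days


def monthly_cost(rate_per_hour: float, hours: float) -> float:
    """Compute cost in USD for one node billed by the hour."""
    return round(rate_per_hour * hours, 2)


def cost_table() -> dict:
    """Map each node type to (always-on cost, cost when paused out of hours)."""
    return {
        node: (
            monthly_cost(rate, HOURS_ALWAYS_ON),
            monthly_cost(rate, HOURS_WITH_PAUSING),
        )
        for node, rate in HOURLY_RATE_USD.items()
    }


if __name__ == "__main__":
    for node, (always_on, paused) in cost_table().items():
        print(f"{node:>13}: ${always_on:>9,.2f} always on, ${paused:>8,.2f} with pausing")
```

Even with these crude assumptions, the gap between the first RA3 machine and the latest one is easy to see, and so is the effect of being able to pause a cluster that is only needed part of the day.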
